GPT Vision - Learn how to use GPT-4 to analyze and compare images

May 04, 2024

In this tutorial, we're diving into GPT-4 with Vision, an exciting tool that lets the model analyze and interpret images.

With GPT-4 Turbo with Vision, the model can now handle images alongside text inputs, opening up new possibilities across different fields.

Traditionally, language models could only process text. But with GPT-4 with Vision, that's no longer the case. Now, the model can understand content more comprehensively.

Let's kick things off with a quick guide on how to provide images to the model, either through direct links or base64 encoded format.

We'll walk you through the process step by step, showing you how to ask the model about image content and understand its responses.

We'll also delve into the intricacies of image understanding with GPT-4, highlighting what it excels at and where it might struggle, like with detailed object localization.

Plus, we'll cover uploading options, including multiple image inputs and controlling image fidelity using the detail parameter.

Throughout, we'll stress the importance of knowing the model's strengths and limitations for different applications.

By the end, you'll be well-equipped to use GPT-4 with Vision to enhance your projects. Let's get started!

Kickstart Your Image Processing

Images are made available to the model in two main ways:

- by passing a link to the image

- or by passing the base64 encoded image directly in the request.

Images can be passed in the user, system, and assistant messages.

from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(

model="gpt-4-turbo",

messages=[

{ "role": "user",

"content": [

{

"type": "text", "text": "What’s in this image?"

},

{

"type": "image_url",

"image_url": {

"url": "https://ai-for-devs.s3.eu-central-1.amazonaws.com/example.png",

},

},

],

}

],

max_tokens=300,

)

print(response.choices[0])

This results in:

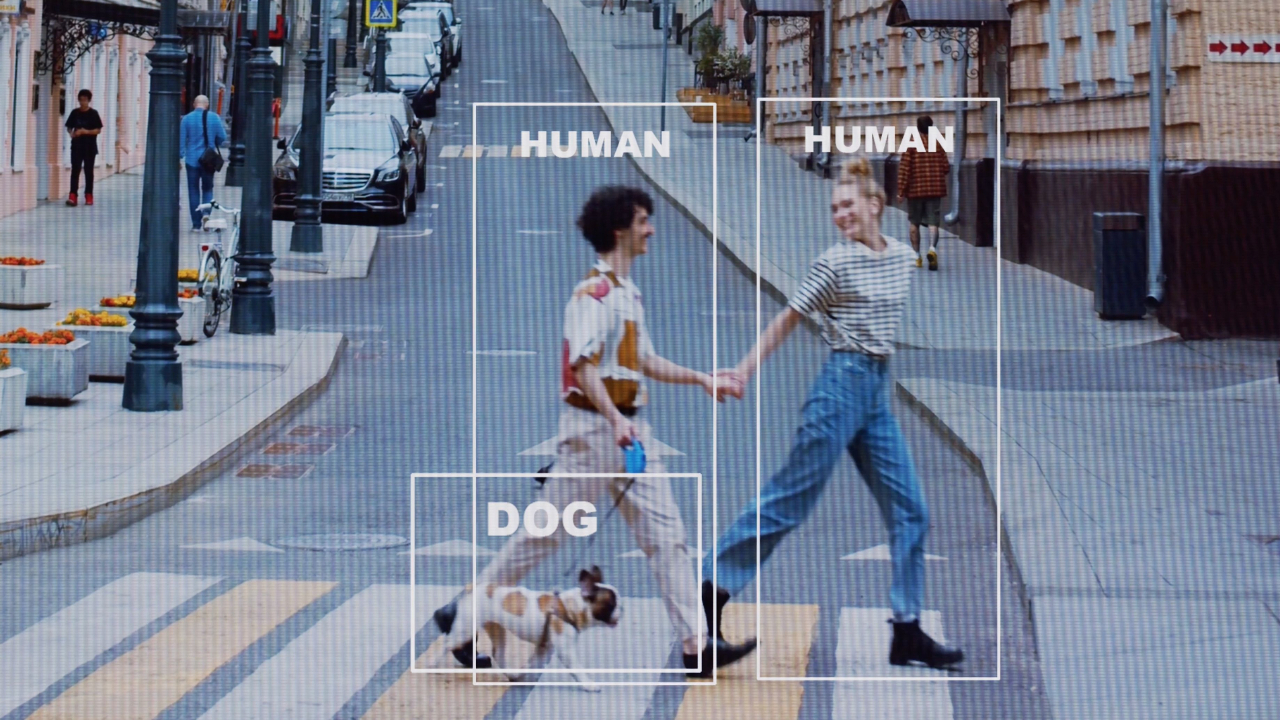

"This image captures a vibrant urban scene where a young couple is crossing the street while holding hands and smiling joyfully at each other. The man is wearing a light-colored outfit with tan pants and a white shirt, accessorized with a crossbody bag, while the woman has chosen a casual yet stylish look, pairing a striped top with high-waisted jeans. They are accompanied by a small dog, adding a cheerful touch to the scene. The background features a city street with cars parked on the side, pedestrians in the distance, and some city architecture, which helps create a lively, metropolitan atmosphere."

The model excels at providing general answers regarding the contents of images. It comprehends the relationship between objects but isn't finely tuned to answer intricate questions about object locations within an image.

For instance, it can accurately identify the color of a car or suggest dinner ideas based on fridge contents. However, if presented with an image of a room and asked about the location of a chair, its response may not be accurate.

How to Analyze Local Files?

If you possess images or a collection of images stored locally, you can transmit them to the model in base64 encoded format. Here's an illustration of how to implement this:

from openai import OpenAI

import base64

client = OpenAI()

def encode_image(image_path):

with open(image_path, "rb") as image_file:

return base64.b64encode(image_file.read()).decode('utf-8')

image_path = "example.png"

base64_image = encode_image(image_path)

payload = {

"model": "gpt-4-turbo",

"messages": [

{ "role": "user",

"content": [

{

"type": "text",

"text": "What’s in this image?"

},

{

"type": "image_url",

"image_url": {

"url": f"data:image/jpeg;base64,{base64_image}"

}

}

]

}

],

"max_tokens": 300

}

Enhanced Capability: Processing Multiple Image Inputs

The Chat Completions API is proficient in receiving and analyzing multiple image inputs, whether in base64 encoded format or as image URLs. It processes each image and integrates the insights from all of them to formulate a response to the query.

from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(

model="gpt-4-turbo",

messages=[

{

"role": "user",

"content": [

{

"type": "text",

"text": "What are in these images? Is there any difference between them?",

},

{

"type": "image_url",

"image_url": {

"url": "https://ai-for-devs.s3.eu-central-1.amazonaws.com/example.png",

},

},

{

"type": "image_url",

"image_url": {

"url": "https://ai-for-devs.s3.eu-central-1.amazonaws.com/goat.png",

},

},

],

}

], max_tokens=300,

)

print(response.choices[0])

In this scenario, the model is presented with two identical copies of the same image. It can respond to inquiries about both images collectively or address questions about each image independently.

Improving Image Fidelity: Low and High Resolution Image Understanding

Controlling the detail parameter gives you precise control over how GPT-4 processes images. With options like low, high, or auto, you dictate the level of detail needed for your task.

In low-resolution mode, the model receives a simplified 512px by 512px version of the image, using only 65 tokens. This is ideal for quick responses and token efficiency.

Opting for high-resolution mode allows for a more detailed analysis. After initially viewing the low-res image, the model scrutinizes it in 512px squares, each consuming 129 tokens.

The default auto setting intelligently selects between these modes based on the image size, ensuring optimal processing for your needs.

from openai import OpenAI

client = OpenAI()

response = client.chat.completions.create(

model="gpt-4-turbo",

messages=[

{

"role": "user",

"content": [

{

"type": "text",

"text": "What’s in this image?"

},

{

"type": "image_url",

"image_url": {

"url": "https://ai-for-devs.s3.eu-central-1.amazonaws.com/example.png",

"detail": "high"

},

},

],

}

],

max_tokens=300,

)

print(response.choices[0].message.content)

How To Improve The Performance

For prolonged conversations, it's advisable to transmit images using URLs rather than base64 encoding. This enhances the model's efficiency by reducing latency. Additionally, downsizing images beforehand to dimensions below the maximum expected size is recommended. For low-resolution mode, aim for a 512px x 512px image, while for high-resolution mode, ensure that the short side is under 768px and the long side is under 2,000px.

How are the costs calculated for image inputs in GPT-4?

Image inputs are subject to token-based metering, akin to text inputs. The token cost is contingent on two primary factors: the image's dimensions and the specified detail option. Images designated with detail: low incur a fixed cost of 85 tokens each. Conversely, detail: high images undergo resizing to fit within a 2048 x 2048 square while preserving aspect ratio. Subsequently, the shortest side is adjusted to 768px in length. The image is then divided into 512px squares, with each square incurring a cost of 170 tokens. An additional 85 tokens are appended to the final total.

Consider these examples to illustrate the concept:

-

For a 1024 x 1024 square image in detail: high mode, the token cost is 765 tokens. Since the image's dimensions are below 2048, no initial resizing is performed. With a shortest side of 1024px, the image is downscaled to 768 x 768. Consequently, four 512px square tiles are generated, resulting in a token cost of 170 * 4 + 85 = 765 tokens.

-

In the case of a 2048 x 4096 image under detail: high mode, the token cost amounts to 1105 tokens. Initially, the image is scaled down to 1024 x 2048 to fit within the 2048 square. Further adjustment reduces the shortest side to 768px, resulting in dimensions of 768 x 1536. The image is divided into six 512px tiles, leading to a token cost of 170 * 6 + 85 = 1105 tokens.

-

Finally, for a 4096 x 8192 image designated as detail: low, the token cost remains at 85 tokens. In this scenario, irrespective of the input size, low-detail images incur a fixed token cost.

Conclusion

GPT-4 with Vision is like giving your AI a pair of eyes. It can now understand images along with text, which opens up a world of possibilities. While it's great at answering general questions about what's in an image, it might stumble a bit on the nitty-gritty details. But hey, Rome wasn't built in a day, right? With a bit of know-how and creativity, you can tap into this visual understanding to take your projects to the next level. Let's keep exploring and pushing the boundaries of what AI can do!

Stay Ahead in AI with Free Weekly Video Updates!

AI is evolving faster than ever – don’t get left behind. By joining our newsletter, you’ll get:

- Weekly video tutorials previews on new AI tools and frameworks

- Updates on major AI breakthroughs and their impact

- Real-world examples of AI in action, delivered every week, completely free.

Don't worry, your information will not be shared.

We hate SPAM. We will never sell your information, for any reason.